Let's dive right into the world of the best Zyte alternatives. You're probably here because you've realized that while Zyte is great, there might be something else out there better suited for your specific web scraping and data extraction needs.

We'll guide you through some top-notch alternatives that could give your projects a new edge. By exploring options with robust features or understanding how enhanced proxy services can safeguard your data collection activities, this article aims to equip you with valuable insights.

We'll also look at using headless browsers for dynamic content and at refining your data gathering with the latest tools. By the end, you should be better equipped to make an informed choice among the many scraping tools available today.

What Does Zyte Do?

Zyte (formerly known as Scrapinghub) is a data extraction platform that specializes in turning the web into actionable data with its web scraping technologies. At its core, Zyte gives businesses and developers the power to automate the collection of web data at scale.

This capability is crucial for customer support teams, sales professionals, remote workers, tech workers, and those in various office jobs seeking to leverage big data for competitive insights, market research, price monitoring, or even lead generation. 80% of businesses consider web scraping essential for competitive analysis. It has a big impact, too: web scraping can reduce data collection time by up to 70%. But you can only get these time savings if you're using the right web scraping tool. Finding web scraping alternatives is key if Zyte just isn't cutting it.

Key Features of Zyte

Automated Web Scraping: One of Zyte's standout features is its ability to automate the process of extracting data from websites. This means you can gather information 24/7 without manual intervention.

Data Extraction at Scale: Whether you need information from a handful of pages or millions across different websites globally, Zyte's infrastructure supports large-scale operations seamlessly.

Rapid Integration: With easy-to-use APIs (Application Programming Interfaces), integrating extracted data into your systems or workflows becomes hassle-free.

Clean and Structured Data Output: The platform ensures that the collected data is not only accurate but also delivered in an organized manner suitable for immediate use or analysis.

Timely access to relevant information is a significant advantage, and Zyte helps by simplifying the complex processes involved in obtaining web data. The applications are vast and varied, from improving customer support strategies through a better understanding of competitors’ offerings to optimizing sales efforts by identifying potential leads more efficiently.

The essence of what makes Zyte remarkable lies not just in its technology but how it enables businesses across sectors to make informed decisions swiftly. By automating tedious tasks associated with gathering internet-sourced intelligence while ensuring high-quality outputs, Zyte stands out as a pivotal tool within any organization’s arsenal aiming towards operational excellence powered by robust AI-driven insights.

Understanding the Need For Zyte Alternatives

Businesses increasingly rely on collecting web data quickly and accurately, so it pays to know the top Zyte alternatives. People look for alternatives to Zyte for several reasons that reflect specific needs, challenges, or preferences in their web scraping projects. Here are some common ones:

Cost and Budget Constraints: Zyte's pricing model may not fit every user's budget, especially for startups, individuals, or small projects where cost efficiency is a priority. Users might look for more affordable alternatives that still meet their basic web scraping needs.

Ease of Use: Users with less technical expertise or those seeking a more straightforward setup might find Zyte's platform and its features complex. Alternatives that offer a simpler user interface or require less programming knowledge could be more appealing.

Lack of Specific Features or Functionalities: Some users may have unique requirements not fully addressed by Zyte, such as specific data extraction capabilities, integration with other software tools, or advanced analytics features. They might search for alternatives that offer these particular features.

Performance and Speed: If users experience performance issues with Zyte, such as slow data extraction or difficulties in handling large volumes of data efficiently, they might look for faster or more optimized solutions.

Need for More Customization and Flexibility: Users requiring higher levels of customization for their web scraping processes or those who need to adapt the tool closely to their workflow might find Zyte lacking and seek out alternatives that offer greater flexibility.

As noted by one G2 reviewer:

Over our time working together, we did encounter some re-occuring inaccuracies, issues, and errors. At times it felt like we were the ones that would be up raising an alarm, and that we were catching a lot of errors on our own. However - I do feel that toward the end of our working relationship - this had be remedied and we had worked through all issues and things were running smoothly.

The Best Zyte Alternatives for 2025

Picking the right tool for collecting and cleaning web data is crucial. If you're on the hunt for something different from Zyte, we've got a lineup of top-notch options that each bring their own strengths, robust capabilities, and adaptability to the table.

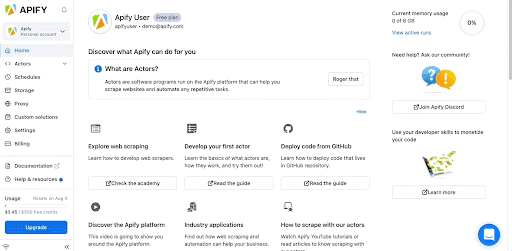

1. Apify

Features:

Offers a scalable web crawling and scraping service for developers.

Provides a library of pre-made actors (scrapers) for common use cases.

Enables automation of workflows with JavaScript.

Cloud-based storage and computing resources, accommodating large-scale data extraction projects.

As one reviewer noted on G2:

You get a chance to fully try the product! it's easy to use when you know what to do, and there is a great community around willing to help you.

2. Magical

The best on our list (and yes we know we're biased) for a number of reasons. Magical is a free Chrome extension that can easily scrape any webpage and transfer it to any spreadsheet you choose.

Simple no-code interface

Easy to set up and use

Just point and click at the info you want to scrape

You can use it on LinkedIn or any place you need to scrape customer profiles

As one user noted on G2:

It allows me to prefabricate answers and I use it every time. It is easy to set up and it makes workflow so much better.

3. Scrapy

Features:

Open-source and free to use.

Highly flexible and customizable, ideal for complex scraping needs.

Extensive community support and documentation.

Built-in support for exporting data in various formats and storing it in multiple types of databases.

4. Beautiful Soup

Features:

A Python library for parsing HTML and XML documents.

Works well with Python's requests library to access web content.

Easy to use for simple web scraping tasks.

Ideal for projects that need to parse and manipulate HTML content.
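To give a feel for the parsing workflow described above, here is a minimal sketch. It assumes the `beautifulsoup4` package is installed and uses a hypothetical inline HTML snippet with made-up class names (`product`, `name`, `price`). In a real project the HTML would typically come from a call such as `requests.get(url).text`.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for a downloaded page.
HTML = """
<ul id="products">
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">19.50</span></li>
</ul>
"""


def extract_products(html: str) -> list[dict]:
    """Parse product names and prices out of an HTML fragment."""
    # "html.parser" is Python's built-in parser, so no extra dependency is needed.
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for item in soup.select("li.product"):
        name = item.select_one(".name").get_text(strip=True)
        price = float(item.select_one(".price").get_text(strip=True))
        products.append({"name": name, "price": price})
    return products


if __name__ == "__main__":
    for product in extract_products(HTML):
        print(product)
```

The CSS selectors are the only part tied to the page layout, so adapting the sketch to another site mostly means changing those strings.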

G2 reviewer Amanda says:

Even for those starting with programming, beautifulsoup4 is easy to understand, the commands are simple, and there are lots of tutorials, examples, and optimization tips online. Although it's easy to use, when you are working with a complex project, beautifulsoup4 becomes harder to use. Pagination, older and bad formatted websites and lack of a way to run more than one process are good examples.

5. Octoparse

Features:

User-friendly interface with a point-and-click tool.

Cloud-based service, allowing for data scraping without needing to manage infrastructure.

Offers both free and paid versions.

Advanced features include scheduled scraping and API access.

On a G2 review, user Paola says:

When you do not have much prior programming knowledge and you need to extract data from a website, this software becomes an efficient tool since it is easy to use and is very intuitive; with just a few clicks, you already have a data extraction flow.

6. ParseHub

Features:

Supports complex websites with JavaScript, AJAX, cookies, etc.

Provides a visual editor for selecting elements.

Offers cloud-based service for running the scraping projects.

Available on both free and paid plans, with support for API access.

G2 reviewer Ricardo shares:

ParseHub makes the dirty work, all the things that you used to do manually to collect the information on a website, now is automatic with this solution, the best for bulk information collection. I think ParseHub needs to be a little bit more intuivite, a little bit more more user friendly, some times the steps are redundant and you need to do everything all over again.

7. Diffbot

Features:

Uses advanced AI and machine learning to extract data.

Can automatically recognize and categorize different types of pages (products, articles, etc.).

Offers a range of APIs for different data types.

Suitable for large-scale and complex data extraction needs.

G2 reviewer Justin remarks:

Overall, Diffbot's tools are simple to use and understand outside of more complex use cases. We use several of their features to deliver content insights to our clients. I would recommend Diffbot to any person or organization that needs to pull large amounts of data from arbitrary web sources.

8. Import.io

Features:

Offers a web-based tool for creating datasets by pointing and clicking on the data you want to extract.

Provides integration with hundreds of web APIs.

Features include real-time data extraction, large-scale scraping, and data transformation capabilities.

Aimed at users needing data for business intelligence and analytics.

One Capterra reviewer shares:

Import.io gives you the ability to generate custom data through web scraping. Trying to do this with open source tools is possible, but not easy. Import.io makes it much easier if you're not a programmer or sysadmin, and the pricing is reasonable. Some workflows could have better UI: sometimes there can be a lot of clicking for repetitive and simple tasks, especially on websites with many pages.

9. WebHarvy

Features:

Visual point-and-click interface.

Automatic identification of data patterns in web pages.

Support for scraping from multiple pages, categories, and keywords.

Built-in proxy support and captcha solving.

G2 reviewer Edward notes:

The Simplicity Of Not Needing To Know Or Understand Coding & Such Complicated Applications such as Python.. Plus Mathew & Virat are Always To My Rescue With Professionalism That Is Beyond Words That Can Describe, When Ever I Am In A technical Jam They Have Not Been Unable To Find Me A Solution To My Problem - They Are Both Worth there Weight In Gold ! Thank You For Providing Both There Service ! Other Wise I Would Not Have Been Successful With This Product At All!

10. Bright Data

Features:

Has an extensive proxy network.

Works with a large proxy pool so that businesses can access real-time data from anywhere without being blocked or running into CAPTCHA challenges.

G2 reviewer Shane says:

Bright Data provides an unique web/HTML content collection and processing platform, its great geo and network coverage always provide great value for research projects. It is also very easy to use, once your proxy is setupped, the example commandline can be a very practical bootstrap to be one part of your project. While I am working one my project, I need to fine tune the proxy script daily, and it works very well. It is also very easy to integrate the proxy with other tools, such as selenium or other dummy browsers. The best part is the customer support, the technique support is very timely and knowledagable about their product, and can always provide great suggestions.

11. Oxylabs

Features:

High-quality resilient proxies tailored for complex scraping needs.

Automatic proxy rotation.

Prioritizes the precision and caliber of the web data it retrieves.

G2 reviewer Sam says:

We send just short of 1 billion requests per month through Oxylabs and 99.99% of the time, it just works. In some cases we have seen subnets picked up immediate as blacklisted, more flexibility here to test and switch subnets would be appreciated. I feel there could be better tooling when it comes to routing, we built our own round robin layer over the Oxylabs subnets, would love to see things like this built into the product rather that put on the user.

12. ZenRows

Features:

Streamlined API that opens the door for those without tech savvy to dive into data extraction effortlessly.

Can smoothly funnel data in structures such as JSON right into tools like Excel or online storage systems.
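As a rough illustration of moving JSON results into a spreadsheet-friendly format, the sketch below converts a list of JSON records into CSV text that Excel can open. It uses only Python's standard library and does not call any ZenRows API. The payload and the function name `json_records_to_csv` are hypothetical.

```python
import csv
import io
import json

# Hypothetical JSON payload, standing in for the output of a scraping API.
JSON_PAYLOAD = '[{"name": "Widget", "price": 9.99}, {"name": "Gadget", "price": 19.5}]'


def json_records_to_csv(payload: str) -> str:
    """Convert a JSON array of flat objects into CSV text."""
    records = json.loads(payload)
    if not records:
        return ""
    # Use the keys of the first record as the column headers.
    fieldnames = list(records[0].keys())
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


if __name__ == "__main__":
    print(json_records_to_csv(JSON_PAYLOAD))
```

The sketch assumes every record has the same flat set of keys. Nested or irregular records would need flattening first.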

In a G2 review, user Mariam says:

What I like the most about ZenRows is the great documentation and the support team, which quickly resolves any issues. It offers reliable scraping and high availability. All this makes ZenRows a must-have for web scraping.

Maintaining Legal Compliance in Web Scraping Activities

When it comes to web scraping, staying on the right side of data privacy laws is a must. These rules are meant to ensure that your methods of gathering data respect users' consent and follow ethical data collection practices. But navigating these waters can be tricky.

Data Privacy Laws

Legal compliance can get complicated for companies that extract data, because regulations vary significantly from one region to another. For example, Europe's General Data Protection Regulation (GDPR) sets stringent guidelines on how personal data should be handled, requiring a lawful basis, such as consent or legitimate interest, before an individual's information can be processed. This means that if your scraping activities touch the personal data of people in the EU, you need a documented lawful basis for collecting it.

In the US, while there isn't an overarching federal law akin to GDPR yet, various state-level laws like California's CCPA introduce similar considerations for businesses collecting or handling personal information of its residents. Understanding and adhering to these varied requirements is crucial not only for legal compliance but also for maintaining trust with your customers and users.

Responsible Data Collection

To align with best practices in ethical web scraping and avoid potential legal pitfalls, adopting transparent methods is key. Always check a website's robots.txt file, which states which paths its owner allows crawlers to visit, and review its terms of service. When the rules are unclear, reach out to the owner directly for permission. This step alone can significantly reduce the risk of unauthorized access.
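A robots.txt check can be automated before any page is requested. The sketch below uses Python's standard `urllib.robotparser` module on a hypothetical robots.txt body. The user agent name `example-scraper` and the `example.com` URLs are placeholders, and in practice the rules would be downloaded from the target site rather than hard-coded.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would fetch it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-scraper"  # placeholder name for illustration


def build_parser(robots_txt: str) -> RobotFileParser:
    """Load robots.txt rules from a string instead of over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for url in ("https://example.com/products/1", "https://example.com/private/data"):
        print(url, "allowed" if rules.can_fetch(USER_AGENT, url) else "disallowed")
    print("crawl delay:", rules.crawl_delay(USER_AGENT))
```

Passing the robots.txt check is a minimum courtesy rather than a legal guarantee, so a site's terms of service still need to be reviewed separately.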

Moreover, throttling your request rate and, where appropriate, rotating IPs through proxy services can minimize disruption to targeted websites and keep your extraction process running smoothly. Neither technique should be used to overload servers or to bypass anti-scraping measures a site has put in place.
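One way to combine a fixed delay between requests with simple round-robin proxy rotation is sketched below. It only plans which proxy each URL would use and pauses between items. It sends no traffic itself, and the proxy addresses, URLs, and the function name `plan_requests` are hypothetical.

```python
import itertools
import time
from typing import Callable, Iterable, Iterator, Sequence

# Hypothetical proxy endpoints; a real list would come from your proxy provider.
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]


def plan_requests(
    urls: Iterable[str],
    proxies: Sequence[str],
    delay_seconds: float,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[tuple[str, str]]:
    """Yield (url, proxy) pairs, pausing between consecutive requests."""
    proxy_cycle = itertools.cycle(proxies)  # round-robin over the proxy pool
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # wait before every request except the first
        yield url, next(proxy_cycle)


if __name__ == "__main__":
    pages = [f"https://example.com/page/{n}" for n in range(1, 4)]
    for url, proxy in plan_requests(pages, PROXIES, delay_seconds=0.01):
        print(f"fetch {url} via {proxy}")
```

The `sleep` parameter is injectable so the pacing logic can be tested without waiting. The delay value itself should follow the target site's stated crawl delay where one exists.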

Which Alternative Will You Choose?

Now that you've explored the best Zyte alternatives, you're ready to make your selection. Choosing the right web scraping tool is crucial for working efficiently with the vast amount of data online. By evaluating the distinct functionalities, scalability options, and support provided by these alternatives, you can choose a web scraping tool that not only fits your current requirements but also adapts to your growing and changing data collection needs.

Magical, while not a full-fledged web scraper, is very effective at collecting data from any webpage you give it. It's perfect for companies that want something that's easy to set up and use and that can help with other repetitive tasks. Try it today and find out why more than 650,000 people are saving 7 hours a week on average.